CTE In SQL

Guide to SQL Common Table Expressions (CTEs)

Guide to SQL Common Table Expressions (CTEs)

If you’re new to SQL, you’ve probably come across complex queries that are hard to read and understand. This is where CTEs, or Common Table Expressions, can make your life easier. In this guide, we’ll explain what a CTE is, how it works, why you should use it, and walk you through examples step by step.

What is a CTE?

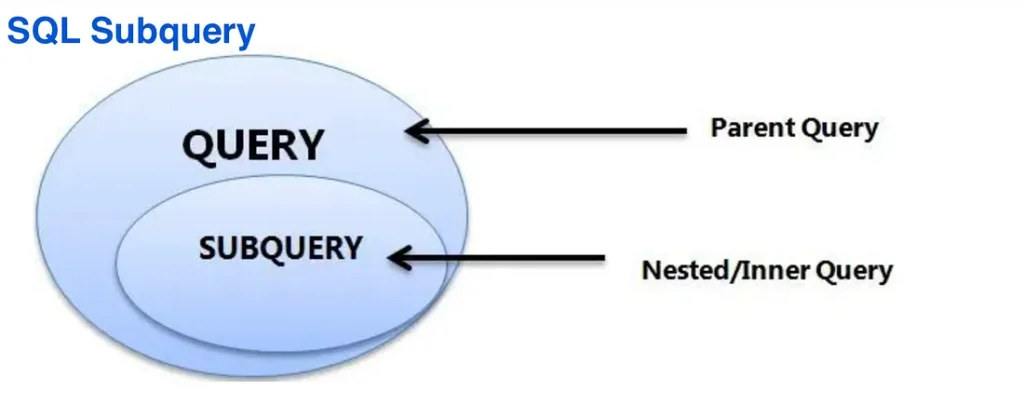

A Common Table Expression (CTE) is a temporary result set in SQL. You can think of it as a shortcut or a temporary “mini table” that helps you break a complex query into smaller, more readable steps. The CTE only exists for the duration of the query in which it is defined.

CTEs are defined using the WITH keyword and can be reused within the same query.

How to Write a CTE

The basic structure of a CTE looks like this:

— Your query goes here

)

SELECT *

FROM CTE_name;

Breaking it down:

WITHKeyword: Used to define the CTE.- CTE Name: A descriptive name (e.g.,

CTE_name) for your CTE. - Query: A SELECT statement inside the parentheses that creates the temporary result set.

- Usage: The CTE can be referenced like a table in the main query.

Why Use a CTE?

CTEs offer several advantages:

- Improves Readability: Breaks complex queries into smaller, manageable steps.

- Reduces Repetition: Avoids repeating the same logic multiple times in the query.

- Organizes Logic: Modularizes queries, making them easier to debug and maintain.

- Enables Recursion: Helps with hierarchical data like organizational charts or family trees.

Example with Visual Explanation

Scenario:

You have an Employees table:

| EmployeeID | Name | Department | Salary |

|---|---|---|---|

| 1 | Alice | HR | 60000 |

| 2 | Bob | IT | 80000 |

| 3 | Charlie | HR | 55000 |

| 4 | Diana | IT | 75000 |

Task: Find all employees whose salary is above the average salary in their department.

Query Using a CTE:

Department,

AVG(Salary) AS AvgSalary

FROM Employees

GROUP BY Department

)

SELECT

e.Name,

e.Department,

e.Salary

FROM Employees e

JOIN DepartmentAverages da

ON e.Department = da.Department

WHERE e.Salary > da.AvgSalary;

Step-by-Step Explanation:

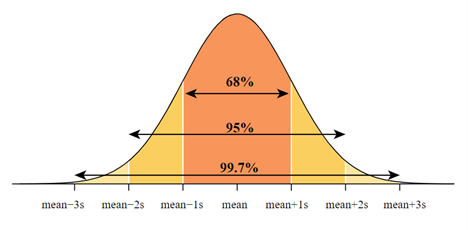

- Step 1: CTE (DepartmentAverages)

The first part of the query calculates the average salary for each department:

| Department | AvgSalary |

|---|---|

| HR | 57500 |

| IT | 77500 |

This result is stored in the temporary result set named DepartmentAverages.

- Step 2: Main Query

The main query:

- Joins the

Employeestable with theDepartmentAveragesCTE on theDepartmentcolumn. - Compares each employee’s salary with the average salary of their department.

Intermediate Result After the Join:

| EmployeeID | Name | Department | Salary | AvgSalary |

|---|---|---|---|---|

| 1 | Alice | HR | 60000 | 57500 |

| 2 | Bob | IT | 80000 | 77500 |

| 3 | Charlie | HR | 55000 | 57500 |

| 4 | Diana | IT | 75000 | 77500 |

- Step 3: Filter Employees

The query filters out employees whose salary is not greater than the average salary. The final result is:

| Name | Department | Salary |

|---|---|---|

| Alice | HR | 60000 |

| Bob | IT | 80000 |

Why This Approach is Better

Using a CTE simplifies the query by:

- Isolating the logic for calculating department averages in its own step.

- Making the main query easier to read and focus on filtering.

Without the CTE, you’d need to repeat the logic for calculating department averages in the main query, making it more complex and harder to maintain.

Using Multiple CTEs

You can define multiple CTEs in a single query by separating them with commas. Each CTE can build upon the previous ones.

Scenario:

You have the same Employees table.

Task: Find the total salary of employees in departments where the average salary is greater than $60,000.

Query Using Multiple CTEs:

SELECT

Department,

AVG(Salary) AS AvgSalary

FROM Employees

GROUP BY Department

),

HighPayingDepartments AS (

SELECT

Department

FROM DepartmentAverages

WHERE AvgSalary > 60000

)

SELECT

e.Department,

SUM(e.Salary) AS TotalSalary

FROM Employees e

JOIN HighPayingDepartments hpd

ON e.Department = hpd.Department

GROUP BY e.Department;

- Step 1: Calculate Department Averages

The first CTE (DepartmentAverages) calculates average salaries:

| Department | AvgSalary |

|---|---|

| HR | 57500 |

| IT | 77500 |

| Sales | 90000 |

- Step 2: Identify High-Paying Departments

The second CTE (HighPayingDepartments) filters departments where the average salary is greater than $60,000:

| Department |

|---|

| IT |

| Sales |

- Step 3: Calculate Total Salary

The main query joins theEmployeestable withHighPayingDepartmentsand calculates the total salary for each department:

| Department | TotalSalary |

|---|---|

| IT | 155000 |

| Sales | 90000 |

Recursive CTEs

Recursive CTEs are especially useful for working with hierarchical data, such as organizational charts or folder structures.

Scenario:

You have an Employees table that includes reporting relationships:

| EmployeeID | Name | ManagerID |

|---|---|---|

| 1 | Alice | NULL |

| 2 | Bob | 1 |

| 3 | Charlie | 2 |

| 4 | Diana | 2 |

Task: Find the entire hierarchy of employees reporting under Alice.

Recursive CTE Query:

— Anchor Query

SELECT EmployeeID, Name, ManagerID

FROM Employees

WHERE ManagerID IS NULL

UNION ALL

— Recursive Query

SELECT e.EmployeeID, e.Name, e.ManagerID

FROM Employees e

INNER JOIN EmployeeHierarchy eh

ON e.ManagerID = eh.EmployeeID

)

SELECT * FROM EmployeeHierarchy;

Step-by-Step Process:

Anchor Query: Finds Alice (ManagerID = NULL).

Recursive Query: Finds employees reporting to Alice (Bob) and then employees reporting to Bob (Charlie and Diana).

Final Result:

| EmployeeID | Name | ManagerID |

|---|---|---|

| 1 | Alice | NULL |

| 2 | Bob | 1 |

| 3 | Charlie | 2 |

| 4 | Diana | 2 |

Key Takeaways

- CTEs make queries modular, readable, and reusable.

- Multiple CTEs allow complex logic to be split into manageable steps.

- Recursive CTEs are perfect for hierarchical data.

Practice using CTEs to simplify complex queries and better organize your SQL logic.